Quantum computing has attracted significant attention as a next-generation computing technology because of its potential to solve problems considered intractable for classical computers, as reflected in the recent discussion of quantum supremacy. Grid sees quantum computing not only as a tool for optimization and quantum chemistry problems, but also as a tool for AI (machine learning, deep learning, etc.) calculations such as feature extraction.

Previous works have reported successful implementations of machine learning algorithms, such as principal component analysis and autoencoders, on quantum computers. This work announces a gradient descent algorithm based on backpropagation, the method commonly used to optimize neural network parameters in machine learning, for use on noisy intermediate-scale quantum (NISQ) computers.

Due to the non-linear nature of quantum bits (qubits), Grid proposes that this algorithm can be used to perform the feature extraction and representation calculations that deep learning methods employ. Grid also sees the possibility of future performance gains accompanying an increase in the number of qubits.

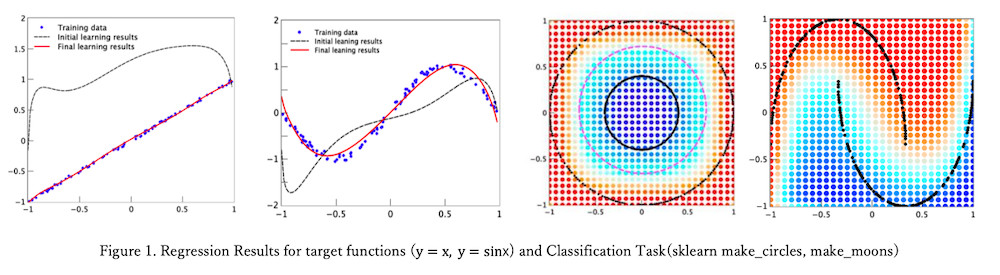

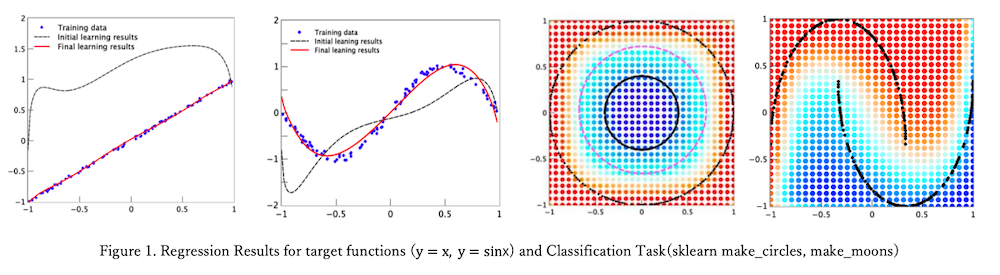

(Figure 1: Training Results for Regression and Classification Problems; from paper)

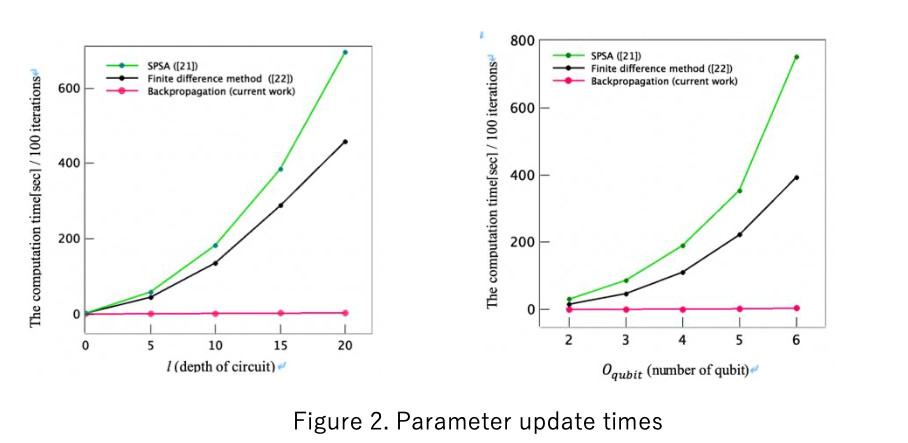

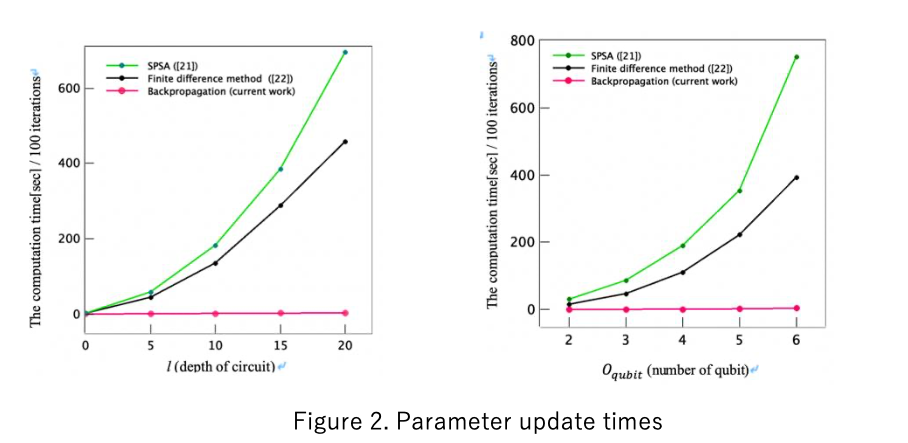

The algorithm proposed in this work significantly reduces the training time required for parameter optimization in quantum machine learning compared with the conventional finite difference method. The paper compares parameter optimization times during training across various circuit depths and numbers of qubits, and confirms that the proposed algorithm is significantly faster than two existing methods.
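To illustrate why a backpropagation-style gradient can be cheaper than finite differences, here is a minimal sketch on a classically simulated single-qubit circuit. It is not the algorithm from the paper: the circuit layout, the `Z` expectation value used as the objective, and all function names are assumptions made for this example. Finite differences re-run the whole circuit twice per parameter, while the backward pass reuses the stored intermediate states from a single forward pass.

```python
import numpy as np

# Pauli matrices; each gate is a rotation exp(-i * theta * G / 2) with G in {X, Y}.
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)
I2 = np.eye(2, dtype=complex)


def rotation(gen, theta):
    """Single-qubit rotation generated by the Pauli matrix `gen`."""
    return np.cos(theta / 2) * I2 - 1j * np.sin(theta / 2) * gen


def expectation(thetas, gens):
    """Simulate the circuit on |0> and return <Z> (illustrative objective)."""
    state = np.array([1, 0], dtype=complex)
    for gen, theta in zip(gens, thetas):
        state = rotation(gen, theta) @ state
    return float(np.real(np.vdot(state, Z @ state)))


def finite_difference_grad(thetas, gens, eps=1e-6):
    """Central differences: two full circuit simulations per parameter."""
    thetas = np.asarray(thetas, dtype=float)
    grad = np.zeros(len(thetas))
    evals = 0
    for k in range(len(thetas)):
        shift = np.zeros(len(thetas))
        shift[k] = eps
        plus = expectation(thetas + shift, gens)
        minus = expectation(thetas - shift, gens)
        grad[k] = (plus - minus) / (2 * eps)
        evals += 2
    return grad, evals


def backprop_grad(thetas, gens):
    """One forward pass storing states, then one backward pass."""
    states = []
    state = np.array([1, 0], dtype=complex)
    for gen, theta in zip(gens, thetas):
        state = rotation(gen, theta) @ state
        states.append(state)
    lam = Z @ state  # observable applied to the final state
    grad = np.zeros(len(thetas))
    for k in reversed(range(len(thetas))):
        # d<Z>/d(theta_k) = Im(<lam| G_k |psi_k>) for U_k = exp(-i theta_k G_k / 2)
        grad[k] = np.imag(np.vdot(lam, gens[k] @ states[k]))
        # Undo gate k on the backward vector before moving to gate k-1.
        lam = rotation(gens[k], thetas[k]).conj().T @ lam
    return grad


def gradient_descent(thetas, gens, lr=0.1, steps=100):
    """Plain gradient descent on <Z> using the backward-pass gradient."""
    thetas = np.asarray(thetas, dtype=float)
    for _ in range(steps):
        thetas = thetas - lr * backprop_grad(thetas, gens)
    return thetas
```

For a circuit with P parameters, `finite_difference_grad` performs 2P full simulations, whereas `backprop_grad` applies each gate once forward and once backward. The sketch relies on access to intermediate state vectors, which a classical simulator provides; how the paper's method obtains the corresponding quantities is not covered here.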

Grid, Inc. will continue its research and development efforts on quantum computing algorithms with the goal of real-world implementation.

(Figure 2: Comparison of Parameter Optimization times for Finite Difference and Gradient Descent methods; from paper)

